Giving Voice Agents Memory

The past few weeks have been spent on voice agents behind streaming avatars. Still stealth, TBA, under wraps, etc.

It was pretty straightforward to start with: build an STT -> LLM -> TTS loop, generate phonemes, and stream responses to the avatars. On top of the onboarding data, which is already extensive, save each interaction with a timestamp. Then, for each query to the LLM, send the persona along with the interactions.
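Here is a minimal sketch of that naive setup. It assumes an OpenAI-style `{ role, content }` message format. `Interaction`, `NaiveMemory`, and `buildMessages` are hypothetical names used only for illustration; this is not our production code.

```typescript
// Hypothetical shapes for illustration; not a real library API.
interface Interaction {
  role: "user" | "assistant";
  content: string;
  timestamp: string; // ISO-8601
}

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

class NaiveMemory {
  private log: Interaction[] = [];

  // Save every turn with a timestamp; nothing is ever summarized or dropped.
  record(role: Interaction["role"], content: string, at: Date = new Date()): void {
    this.log.push({ role, content, timestamp: at.toISOString() });
  }

  // Every LLM call gets the persona plus the *entire* history.
  // (The caller records the new user turn and the reply afterwards.)
  buildMessages(persona: string, userQuery: string): ChatMessage[] {
    const history: ChatMessage[] = this.log.map((i) => ({
      role: i.role,
      content: `[${i.timestamp}] ${i.content}`,
    }));
    return [{ role: "system", content: persona }, ...history, { role: "user", content: userQuery }];
  }
}

// Usage
const mem = new NaiveMemory();
mem.record("user", "I'm training for a marathon", new Date("2024-05-01T09:30:00Z"));
mem.record("assistant", "Great, when is the race?", new Date("2024-05-01T09:30:05Z"));
const msgs = mem.buildMessages("You are Coach Alex, a fitness coach.", "What should I eat tonight?");
console.log(msgs.length); // 4: persona + 2 history turns + new query
```

The prompt grows with every recorded turn, and that growth is where the problems below come from.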

This worked for a while, and it still does. But in longer conversations, we started noticing issues: information from previous conversations getting lost, fitness coaches confidently answering questions about quantum computing, avatars drifting from the conversation flow, etc.

These are all standard problems with GenAI conversational interfaces, so we assumed there would be a single cohesive solution out there. Instead, the ecosystem is fragmented and it's difficult to pick a single winner.

So here are our observations and landscape exploration so far.

The Problems

Problem 1: The Linear Context

Our naive implementation sent the entire conversation history with every request. It was bound to fail, but it was easy to implement and it worked for a while.

```
// The quadratic cost curve of stateless memory
Token usage:      N * (N+1) / 2   // Every message requires all previous messages
Cost per session: O(N²)           // Quadratic growth
Latency:          50ms * N        // Linear degradation
Context limit:    128k tokens     // Hard ceiling = ~100 conversations
```

This approach still has legs if the application is more or less stateless, like short-lived chatbots, email agents, etc.

But if the goal is to replace human interactions, then being able to recall something which was discussed in previous interactions adds a lot of value.
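The quadratic growth in the cost sketch is easier to see with numbers. If every request resends all previous messages, request k carries k messages. A session of N turns therefore sends 1 + 2 + ... + N = N(N+1)/2 message payloads in total. The sketch below compares that against a hypothetical fixed-size memory payload per request. The 50-token message average and 500-token memory budget are made-up illustrative values; real messages vary.

```typescript
// Total tokens sent over a session when every request resends the full history.
// Assumes every message has the same average size (a simplification).
function naiveSessionTokens(turns: number, tokensPerMessage: number): number {
  // Request k carries k messages: 1 + 2 + ... + N = N(N+1)/2
  return (tokensPerMessage * (turns * (turns + 1))) / 2;
}

// Same session if each request instead carries a fixed-size memory payload
// (e.g. retrieved facts) plus the new message: linear in N.
function boundedSessionTokens(turns: number, tokensPerMessage: number, memoryBudget: number): number {
  return turns * (memoryBudget + tokensPerMessage);
}

for (const n of [10, 100, 1000]) {
  console.log(n, naiveSessionTokens(n, 50), boundedSessionTokens(n, 50, 500));
}
// 10 2750 5500
// 100 252500 55000
// 1000 25025000 550000
```

For short sessions the naive approach is actually cheaper, which matches it still having legs for short-lived apps. The curves cross quickly once conversations get long.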

Problem 2: Conversation Drift

Even within context limits, avatars tend to drift from the conversation flow. A fitness coach would start every conversation with "About your marathon training..." even when asked about dinner.

Problem 3: Hallucinating Memories

The most annoying one tbh:

User: "What did I tell you about my sister?"
Avatar: "Your sister Sarah is turning 30 next month!"
User: "I never mentioned a sister."

And that's kind of expected: there were no blacklisted terms and no guardrails. We were dumping all the content and hoping the LLM would be able to reason about it.

The Solutions (WIP)

Semantic Memory Extraction: Low-hanging fruit. Instead of storing raw conversations, extract and compress facts: "I'm John from SF working on a startup" -> name, location, occupation. This achieves significant token reduction but loses conversational nuance.
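One way to keep the extraction step honest is to ask the LLM for structured JSON and validate it before storing anything. Below is a minimal sketch that assumes a hypothetical `{ facts: [{ key, value }] }` output format from the extraction prompt; the prompt and the LLM call itself are omitted. `Fact`, `parseExtractedFacts`, and `renderFacts` are illustrative names. Malformed entries are dropped rather than stored.

```typescript
// Hypothetical fact shape produced by an extraction prompt (not a library API).
interface Fact {
  key: string;        // e.g. "name", "location", "occupation"
  value: string;      // e.g. "John", "SF"
  sourceTurn: number; // index of the conversation turn it came from
}

// Parse and validate raw LLM output; never trust it blindly.
function parseExtractedFacts(raw: string, sourceTurn: number): Fact[] {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return []; // not JSON at all: store nothing rather than garbage
  }
  if (typeof parsed !== "object" || parsed === null) return [];
  const items = (parsed as { facts?: unknown }).facts;
  if (!Array.isArray(items)) return [];
  return items.flatMap((f): Fact[] => {
    if (typeof f !== "object" || f === null) return [];
    const { key, value } = f as Record<string, unknown>;
    if (typeof key !== "string" || typeof value !== "string") return [];
    if (key.trim() === "" || value.trim() === "") return [];
    return [{ key: key.trim().toLowerCase(), value: value.trim(), sourceTurn }];
  });
}

// Render facts compactly for the prompt instead of the raw transcript.
function renderFacts(facts: Fact[]): string {
  return facts.map((f) => `${f.key}: ${f.value}`).join("\n");
}

// Usage: what an LLM might return for "I'm John from SF working on a startup"
const llmOutput =
  '{"facts":[{"key":"name","value":"John"},{"key":"location","value":"SF"},' +
  '{"key":"occupation","value":"startup founder"},{"key":"","value":"x"}]}';
const facts = parseExtractedFacts(llmOutput, 0);
console.log(renderFacts(facts));
// name: John
// location: SF
// occupation: startup founder
```

The entry with an empty key is silently dropped. Logging those drops is a cheap early signal that the extraction prompt needs work.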

Temporal Knowledge Graphs: Model memory as evolving relationships between entities over time. When facts change ("I moved to NYC"), the old fact isn't deleted but marked temporally invalid. This preserves the history of change, which is critical for maintaining context.
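The "invalidate, don't delete" idea fits in a few lines. This is a minimal in-memory sketch that assumes facts are keyed by (subject, predicate) and carry `validFrom`/`validTo` timestamps. `TemporalFactStore` is a hypothetical name, and this is not how Graphiti or any specific library implements it.

```typescript
// A fact is valid over [validFrom, validTo); validTo === null means "still true".
interface TemporalFact {
  subject: string;
  predicate: string;
  object: string;
  validFrom: Date;
  validTo: Date | null;
}

class TemporalFactStore {
  private facts: TemporalFact[] = [];

  // Asserting a new value closes the currently valid one instead of deleting it.
  assert(subject: string, predicate: string, object: string, at: Date): void {
    for (const f of this.facts) {
      if (f.subject === subject && f.predicate === predicate && f.validTo === null) {
        f.validTo = at; // mark temporally invalid; history is preserved
      }
    }
    this.facts.push({ subject, predicate, object, validFrom: at, validTo: null });
  }

  // What was true at a given moment (defaults to now).
  asOf(subject: string, predicate: string, at: Date = new Date()): string | undefined {
    const t = at.getTime();
    return this.facts.find(
      (f) =>
        f.subject === subject &&
        f.predicate === predicate &&
        f.validFrom.getTime() <= t &&
        (f.validTo === null || t < f.validTo.getTime()),
    )?.object;
  }

  // Full change history, oldest first.
  history(subject: string, predicate: string): TemporalFact[] {
    return this.facts
      .filter((f) => f.subject === subject && f.predicate === predicate)
      .sort((a, b) => a.validFrom.getTime() - b.validFrom.getTime());
  }
}

// Usage
const store = new TemporalFactStore();
store.assert("user", "lives_in", "SF", new Date("2024-01-10"));
store.assert("user", "lives_in", "NYC", new Date("2024-06-01")); // "I moved to NYC"
console.log(store.asOf("user", "lives_in", new Date("2024-03-01"))); // SF
console.log(store.asOf("user", "lives_in", new Date("2024-07-01"))); // NYC
console.log(store.history("user", "lives_in").length); // 2
```

Because the SF fact is closed rather than deleted, the avatar can still answer "how was SF?" without claiming the user lives there now.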

Hybrid Retrieval Systems: Combine semantic search over extracted facts with retrieval of recent raw conversation. Relevant memories are selected at query time instead of sending everything.
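Here is a minimal sketch of query-time selection. It assumes facts already carry embedding vectors from some embedding model; tiny made-up 3-d vectors stand in for real ones. `hybridContext`, `topK`, and `recentTurns` are hypothetical names. The function scores facts by cosine similarity against the query embedding, keeps the top k, and adds the last few raw turns for conversational nuance.

```typescript
interface StoredFact {
  text: string;
  embedding: number[];
}

function cosine(a: number[], b: number[]): number {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return na === 0 || nb === 0 ? 0 : dot / (Math.sqrt(na) * Math.sqrt(nb));
}

// Top-k semantically relevant facts + the most recent raw turns.
function hybridContext(
  queryEmbedding: number[],
  facts: StoredFact[],
  rawTurns: string[],
  topK = 3,
  recentTurns = 4,
): { facts: { text: string; score: number }[]; recent: string[] } {
  const scored = facts
    .map((f) => ({ text: f.text, score: cosine(queryEmbedding, f.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topK);
  return { facts: scored, recent: rawTurns.slice(-recentTurns) };
}

// Usage with toy vectors (a real system would call an embedding model)
const stored: StoredFact[] = [
  { text: "Training for a marathon in 3 months", embedding: [0.9, 0.1, 0.0] },
  { text: "Lives in SF", embedding: [0.0, 0.2, 0.9] },
  { text: "Vegetarian", embedding: [0.1, 0.9, 0.1] },
];
const turns = ["u: hi", "a: hey!", "u: long run today", "a: how far?", "u: 15k"];
const ctx = hybridContext([0.2, 0.95, 0.0], stored, turns, 1, 2); // e.g. "what should I eat?"
console.log(ctx.facts[0].text); // Vegetarian
```

Returning the scores alongside the text matters later: they are what a "retrieval score too low" check would key off.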

Better Observability:

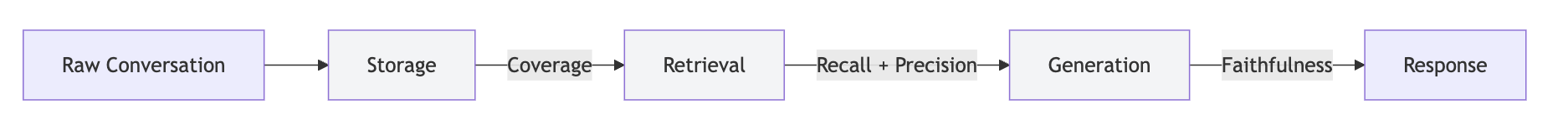

As always, it starts with the fact that you can't fix what you can't measure. We had been treating memory as one black box, so failures compounded at each stage. We need to identify whether we have low recall or low faithfulness, i.e., whether we are failing to recall the memories or failing to generate grounded responses.

So instead of end-to-end testing ("did it work?"), we needed component-level metrics ("where did it break?"), and then to fix that specific part.
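Here is a minimal sketch of what "where did it break?" can look like per test case. It assumes a hand-labelled set of memory IDs that *should* be retrieved for each query. It also assumes a faithfulness score in [0, 1] from some separate judge (e.g. an LLM grader), which is not shown. `recallAtK`, `diagnose`, and the 0.8 / 0.7 thresholds are hypothetical.

```typescript
interface EvalCase {
  query: string;
  expectedMemoryIds: string[];  // hand-labelled ground truth
  retrievedMemoryIds: string[]; // what the retriever returned, in rank order
  faithfulness: number;         // 0..1 from an external judge (assumed)
}

// Fraction of expected memories that appear in the top-k retrieved results.
function recallAtK(expected: string[], retrieved: string[], k: number): number {
  if (expected.length === 0) return 1; // nothing to find counts as full recall
  const top = new Set(retrieved.slice(0, k));
  return expected.filter((id) => top.has(id)).length / expected.length;
}

type Stage = "retrieval" | "generation" | "ok";

// Blame the first stage that falls below its threshold.
function diagnose(c: EvalCase, k = 5, minRecall = 0.8, minFaithfulness = 0.7): Stage {
  if (recallAtK(c.expectedMemoryIds, c.retrievedMemoryIds, k) < minRecall) return "retrieval";
  if (c.faithfulness < minFaithfulness) return "generation";
  return "ok";
}

const cases: EvalCase[] = [
  { query: "Where do I live?", expectedMemoryIds: ["m1"], retrievedMemoryIds: ["m7", "m3"], faithfulness: 0.9 },
  { query: "What's my race?", expectedMemoryIds: ["m2"], retrievedMemoryIds: ["m2", "m5"], faithfulness: 0.4 },
  { query: "What do I do?", expectedMemoryIds: ["m4"], retrievedMemoryIds: ["m4"], faithfulness: 0.95 },
];
console.log(cases.map((c) => diagnose(c)).join(", ")); // retrieval, generation, ok
```

A case that fails retrieval is not also counted against generation, since the model never saw the right memory. That separation is the point of measuring per component.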

Frameworks We Tested

Mem0:

```typescript
// Option 1: Mem0 - aggressive fact extraction (open-source Node SDK)
import { Memory } from 'mem0ai/oss';

// LLM settings live under `config`; the user ID is passed on each call
const m = new Memory({
  llm: { provider: "openai", config: { model: "gpt-4" } },
});

// Automatically extracts and stores facts
await m.add("I'm John from SF, working on a fintech startup", { userId: "avatar_123" });
// Stores roughly: {name: "John", location: "SF", occupation: "fintech founder"}

// Retrieval is semantic: finds relevant facts
const memories = await m.search("What's my background?", { userId: "avatar_123" });
// Returns compressed facts, not the original text

// Problem: loses conversational nuance
// "I hate mornings" becomes {preference: "dislikes mornings"}
// The personality is gone
```

Graphiti (open source, from Zep):

Succinct knowledge graph explainer from Graphiti

```python
# Option 2: Graphiti - temporal knowledge graphs (Python only!)
import asyncio
from datetime import datetime, timezone

from graphiti_core import Graphiti


async def main() -> None:
    # Runs completely standalone: no cloud service, just a local Neo4j instance
    # (uses OpenAI for extraction by default, so OPENAI_API_KEY must be set)
    graphiti = Graphiti("bolt://localhost:7687", "neo4j", "password")
    await graphiti.build_indices_and_constraints()  # one-time setup

    # Stores BOTH the raw conversation (episode) and the facts extracted from it
    await graphiti.add_episode(
        name="marathon_training",
        episode_body="User is training for a marathon in 3 months",
        source_description="conversation",
        reference_time=datetime.now(timezone.utc),  # when this was said
    )

    # Retrieval includes temporal context
    results = await graphiti.search("fitness goals", num_results=10)

    # Returns facts as graph edges between entities, each with
    # valid_at / invalid_at timestamps; episodes stay linked in the graph
    for edge in results:
        print(edge.fact, edge.valid_at, edge.invalid_at)

    await graphiti.close()


asyncio.run(main())
```

Graphiti is (afaik) Python-only, which might be a problem: running a parallel Python service just for memory could be a bit of a pain. But the temporal knowledge graphs might be compelling enough to justify the added complexity over pure TypeScript solutions.

Mem0 seems like a solid choice for Node.js so far.

Hoping to improve on these metrics after implementation:

- Token cost: obv. semantic extraction should help compared to sending everything.

- p95 latency (down from 2-3s): follows from the above; fewer tokens to process means faster responses.

- Zero re-introductions: ideally.

- Some reduction in hallucinations: more grounded responses, but more on this below.
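Tracking the latency target needs a consistent percentile definition. Below is a minimal nearest-rank p95 over per-turn latencies in milliseconds. Where the samples come from (e.g. timing from end of user speech to first TTS audio) is an assumption. `percentile` is a hypothetical helper, and the sample values are made up.

```typescript
// Nearest-rank percentile: the smallest value such that at least p% of samples are <= it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  if (p <= 0 || p > 100) throw new Error("p must be in (0, 100]");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
  return sorted[rank - 1];
}

// Illustrative per-turn latencies (ms), not real measurements
const turnLatenciesMs = [850, 920, 1100, 980, 2400, 1010, 3100, 940, 1200, 1050];
console.log(percentile(turnLatenciesMs, 50)); // 1010
console.log(percentile(turnLatenciesMs, 95)); // 3100
```

With only ten samples the p95 is simply the maximum, so the metric only becomes meaningful once enough turns are logged.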

Open Question

There's one thing we haven't 100% fixed: teaching avatars to say "I don't know."

User: "What did I tell you about my sister's birthday?"
Avatar (no sister in memory): "Your sister's birthday is next month!"
User: "I never mentioned a sister."

Even with sophisticated retrieval, when there's nothing to retrieve, the LLM makes things up. We can't reliably distinguish between:

- Things never mentioned

- Things mentioned but not retrieved

- Things the system failed to store
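At runtime these three cases look identical. Offline, if the raw transcript and the write/read logs are kept, they can be told apart. Below is a minimal sketch that assumes each memory question is tagged with a hypothetical topic keyword. It uses naive case-insensitive substring matching as a stand-in for a proper semantic check. `classifyMiss` and `TurnLogs` are illustrative names.

```typescript
type MissKind = "never_mentioned" | "not_stored" | "not_retrieved" | "retrieved";

interface TurnLogs {
  transcript: string[];     // raw user turns, kept for debugging
  storedFacts: string[];    // everything the extractor wrote
  retrievedFacts: string[]; // what retrieval returned for this query
}

// Naive stand-in for "does this text talk about the topic?"
const mentions = (text: string, topic: string): boolean =>
  text.toLowerCase().includes(topic.toLowerCase());

// Walk the pipeline backwards: retrieved? stored? ever said at all?
function classifyMiss(topic: string, logs: TurnLogs): MissKind {
  if (logs.retrievedFacts.some((f) => mentions(f, topic))) return "retrieved";
  if (logs.storedFacts.some((f) => mentions(f, topic))) return "not_retrieved";
  if (logs.transcript.some((t) => mentions(t, topic))) return "not_stored";
  return "never_mentioned";
}

const logs: TurnLogs = {
  transcript: ["I'm training for a marathon", "My brother lives in Austin"],
  storedFacts: ["training for a marathon"],
  retrievedFacts: [],
};
console.log(classifyMiss("sister", logs));   // never_mentioned
console.log(classifyMiss("brother", logs));  // not_stored
console.log(classifyMiss("marathon", logs)); // not_retrieved
```

This does not solve the runtime problem. It only tells us which bucket a failure fell into, so we know whether to fix extraction or retrieval.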

Better observability will help with detection and with adding post-inference checks:

- Retrieval Score < y: Semantic similarity between query and retrieved memories is low (force "I don't recall")

- Faithfulness < x: Response contradicts retrieved facts (definite hallucination)

- Empty Retrieval Set: Zero memories found for query (legitimate "I've never heard about this")
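The three checks above could be wired into a gate that runs before a response reaches TTS. Below is a minimal sketch with several assumptions. Retrieval returns similarity scores, and a separate judge produces a faithfulness score in [0, 1]. The query has already been classified as memory-dependent; a general question with no memories shouldn't get "I've never heard about this". The thresholds `x` and `y` stay parameters (the values in the usage lines are arbitrary). `gateResponse` is a hypothetical name.

```typescript
interface RetrievedMemory {
  text: string;
  score: number; // semantic similarity between query and memory, 0..1
}

type GateDecision =
  | { action: "respond"; text: string }
  | { action: "fallback"; text: string; reason: "empty_retrieval" | "low_retrieval_score" }
  | { action: "block"; reason: "low_faithfulness" };

function gateResponse(
  draft: string,
  memories: RetrievedMemory[],
  faithfulness: number, // 0..1 from an external judge (assumed)
  x: number,            // faithfulness threshold
  y: number,            // retrieval score threshold
): GateDecision {
  if (memories.length === 0) {
    return { action: "fallback", text: "I've never heard about this.", reason: "empty_retrieval" };
  }
  const best = Math.max(...memories.map((m) => m.score));
  if (best < y) {
    return { action: "fallback", text: "I don't recall that.", reason: "low_retrieval_score" };
  }
  if (faithfulness < x) {
    // Response contradicts retrieved facts: don't speak it; regenerate or fall back.
    return { action: "block", reason: "low_faithfulness" };
  }
  return { action: "respond", text: draft };
}

// Usage (x = 0.7, y = 0.5 are arbitrary)
console.log(gateResponse("Your sister's birthday is next month!", [], 0.2, 0.7, 0.5).action); // fallback
console.log(gateResponse("You live in LA.", [{ text: "Lives in SF", score: 0.88 }], 0.1, 0.7, 0.5).action); // block
```

The order is deliberate: the retrieval checks decide whether there is anything to be faithful *to*, before faithfulness is even considered.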

But forcing the right behavior 100% of the time is still an open problem. The models want to be helpful so badly they'll invent memories rather than disappoint.

If you've cracked this for conversational AI, please reach out. Seriously.